In this article, we take a look at how ChatGPT works. We learned a lot from making this content, and we hope you will learn something, too. Let’s dive right in. ChatGPT was released on November 30, 2022. It reached an estimated 100 million monthly active users in just two months, a milestone that took Instagram two and a half years. At the time, that made ChatGPT the fastest-growing consumer app in history.

Understanding the Core: Large Language Models

The heart of ChatGPT is a Large Language Model (LLM). The current LLM for ChatGPT is GPT-4o. A Large Language Model is a neural network trained on massive amounts of text data to understand and generate human language. During training, the model learns the statistical patterns and relationships between words in the language, and it then uses this knowledge to predict what comes next, one token at a time.

An LLM is often characterized by the number of parameters it contains. OpenAI has not published the parameter counts of GPT-4 or GPT-4o. For a sense of scale, the largest GPT-3 model, their predecessor, has 175 billion parameters spread across 96 layers in the neural network, which made it one of the largest deep learning models ever created at the time of its release.
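
The 175 billion figure is the published size of the largest GPT-3 model, and it can be roughly reproduced with a common back-of-the-envelope rule: a decoder-only transformer has about 12 × layers × width² parameters. The sketch below applies that rule to GPT-3's published configuration (96 layers, hidden width 12288). It is an approximation that ignores embeddings and biases and assumes a 4x feed-forward expansion; the function name is ours, not OpenAI's.

```python
def approx_transformer_params(num_layers: int, d_model: int) -> int:
    """Rough parameter count for a decoder-only transformer.

    Per layer: ~4*d^2 weights for attention (query, key, value, output
    projections) plus ~8*d^2 for the feed-forward block, assuming the
    feed-forward hidden size is 4*d. Embeddings and biases are ignored.
    """
    attention = 4 * d_model * d_model
    feed_forward = 8 * d_model * d_model
    return num_layers * (attention + feed_forward)


# Published GPT-3 configuration: 96 layers, hidden width 12288.
gpt3_estimate = approx_transformer_params(num_layers=96, d_model=12288)
print(f"{gpt3_estimate / 1e9:.0f} billion parameters")  # 174 billion parameters
```

The estimate lands within about one percent of the quoted 175 billion, which suggests that nearly all of the parameters sit in the stacked layers rather than in the embeddings.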

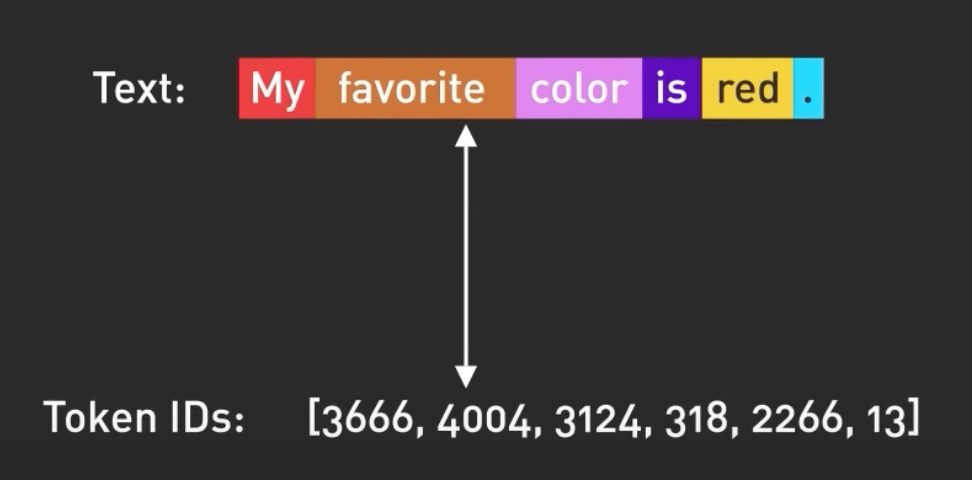

The input and output of the model are organized as tokens. Tokens are numerical representations of words or parts of words. Numbers are used rather than raw text because a neural network operates on numbers, and a fixed vocabulary of token IDs can be processed efficiently.
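
To make the idea concrete, here is a toy word-level tokenizer. Production models use subword schemes such as byte-pair encoding with much larger vocabularies; the tiny corpus and the `build_vocab`, `encode`, and `decode` helpers below are invented for illustration only.

```python
def build_vocab(corpus: list[str]) -> dict[str, int]:
    """Assign an integer ID to every distinct word; ID 0 is reserved for unknowns."""
    vocab = {"<unk>": 0}
    for sentence in corpus:
        for word in sentence.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def encode(text: str, vocab: dict[str, int]) -> list[int]:
    """Turn text into token IDs, mapping unseen words to <unk>."""
    return [vocab.get(word, 0) for word in text.lower().split()]


def decode(ids: list[int], vocab: dict[str, int]) -> str:
    """Turn token IDs back into text."""
    inverse = {token_id: word for word, token_id in vocab.items()}
    return " ".join(inverse[token_id] for token_id in ids)


vocab = build_vocab(["the cat sat", "the dog sat down"])
ids = encode("the dog sat", vocab)
print(ids)                 # [1, 4, 3]
print(decode(ids, vocab))  # the dog sat
```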

OpenAI has not disclosed the details of GPT-4's training data. GPT-3, for reference, was trained on a large chunk of Internet data: its source dataset contains roughly 500 billion tokens, equating to hundreds of billions of words.

Predictive Power and Challenges

The model was trained to predict the next token given a sequence of input tokens. It can generate text that is structured in a grammatically correct and semantically similar way to the internet data it was trained on.
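
Next-token prediction can be illustrated with a deliberately tiny stand-in: a bigram model that counts which token follows which, then generates text greedily by always picking the most frequent follower. An actual LLM replaces the count table with a neural network conditioned on the whole preceding sequence; the corpus and function names here are made up for the sketch.

```python
from collections import Counter, defaultdict


def train_bigram(tokens: list[str]) -> dict[str, Counter]:
    """Count, for every token, how often each other token follows it."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def generate(counts: dict[str, Counter], start: str, max_new_tokens: int) -> list[str]:
    """Greedy generation: repeatedly append the most likely next token."""
    output = [start]
    for _ in range(max_new_tokens):
        followers = counts.get(output[-1])
        if not followers:  # token never seen with a follower: stop
            break
        output.append(followers.most_common(1)[0][0])
    return output


corpus = "the cat sat on the mat and the cat sat on the rug".split()
model = train_bigram(corpus)
print(" ".join(generate(model, "the", 4)))  # the cat sat on the
```

The output echoes the training text because the model only knows the patterns it has counted, which is the same reason an LLM's output resembles its training data.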

However, without proper guidance, the model can also generate outputs that are untruthful, toxic, or reflect harmful sentiments. Even with that downside, the model is already useful but in a very structured way. It can be “taught” to perform natural language tasks using carefully engineered text prompts. This is where the new field of “prompt engineering” came from.

Fine-Tuning with RLHF

To make the model safer and capable of answering questions in the style of a chatbot, the model is further fine-tuned to become the version used in ChatGPT.

This fine-tuning process, which aligns the model more closely with human values, is called Reinforcement Learning from Human Feedback (RLHF). OpenAI has explained how it ran RLHF on the model, but the details can be hard to follow for readers without a machine learning background.

Imagine GPT-4 as a highly skilled chef who can prepare a wide variety of dishes. Fine-tuning GPT-4 with RLHF is like refining this chef’s skills to make their dishes more delicious. Initially, the chef is trained with a large dataset of recipes and cooking techniques. However, sometimes the chef doesn’t know which dish to make for a specific customer request. To help with this, feedback from real people is collected to create a new dataset.

Creating a Comparison Dataset

The first step is to create a comparison dataset. We ask the chef to prepare multiple dishes for a given request and then have people rank the dishes based on taste and presentation.

This helps the chef understand which dishes are preferred by customers.
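
In data terms, one ranked list can be expanded into many "this one beat that one" pairs. The sketch below shows one plausible way to do that; the record layout `(prompt, preferred, rejected)` and the example dishes are our own assumptions, not a documented OpenAI format.

```python
from itertools import combinations


def ranking_to_pairs(prompt: str, ranked_responses: list[str]) -> list[tuple[str, str, str]]:
    """Expand a best-first ranking into (prompt, preferred, rejected) records.

    combinations() preserves input order, so the first item of every pair
    is always the one that was ranked higher.
    """
    return [
        (prompt, preferred, rejected)
        for preferred, rejected in combinations(ranked_responses, 2)
    ]


pairs = ranking_to_pairs(
    "Something light for lunch",
    ["garden salad", "tomato soup", "double cheeseburger"],  # best first
)
for record in pairs:
    print(record)
```

Three ranked dishes yield three comparisons, and in general a ranking of K items yields K × (K − 1) / 2 pairs, so a little labeling effort goes a long way.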

Reward Modeling

The next step is reward modeling. The chef uses this feedback to create a “reward model,” which captures customer preferences as a score. The higher the reward, the better the dish. Next, we train the model with Proximal Policy Optimization (PPO).

The chef practices making dishes while following the reward model, comparing their current dish with a slightly different version, and learning which one is better according to the reward model. This process is repeated several times, refining their skills based on updated customer feedback.
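
The two training signals in this section can be sketched as small formulas. The article does not give the exact losses, so the code below assumes the commonly used forms: a pairwise ranking loss for the reward model, and PPO's clipped surrogate objective for the policy update. The function names and the example numbers are illustrative.

```python
import math


def reward_model_loss(reward_preferred: float, reward_rejected: float) -> float:
    """Pairwise ranking loss: -log(sigmoid(r_preferred - r_rejected)).

    The loss shrinks as the reward model scores the human-preferred
    response further above the rejected one.
    """
    margin = reward_preferred - reward_rejected
    return math.log1p(math.exp(-margin))  # identical to -log(sigmoid(margin))


def ppo_clipped_objective(prob_ratio: float, advantage: float, clip_epsilon: float = 0.2) -> float:
    """PPO clipped surrogate objective for one sample.

    prob_ratio is new_policy_prob / old_policy_prob for the chosen action
    (the "slightly different version" of the dish). Clipping limits how
    much a single update can profit from moving away from the old policy.
    """
    clipped_ratio = max(1.0 - clip_epsilon, min(prob_ratio, 1.0 + clip_epsilon))
    return min(prob_ratio * advantage, clipped_ratio * advantage)


print(round(reward_model_loss(2.0, 0.0), 3))  # 0.127 (correct ordering, small loss)
print(round(reward_model_loss(0.0, 2.0), 3))  # 2.127 (wrong ordering, large loss)
print(round(ppo_clipped_objective(1.5, 1.0), 3))  # 1.2 (gain capped by the clip)
```

In the chef analogy, the first function teaches the critic to agree with customers, and the second lets the chef improve against that critic in small, bounded steps.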

Practical Application in ChatGPT

Now that we understand how the model is trained and fine-tuned, let’s take a look at how the model is used in ChatGPT to answer a prompt. Conceptually, it is as simple as feeding the prompt into the ChatGPT model and returning the output. In reality, it’s a bit more complicated.

Context Awareness

First, ChatGPT knows the context of the chat conversation. This is done by feeding the model the entire past conversation every time a new prompt is entered. This is called conversational prompt injection, which makes ChatGPT appear context-aware.
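
A minimal sketch of that mechanism, assuming a plain-text transcript format: every new prompt is appended to the stored history, and the whole transcript is what the model receives. The `Conversation` class and the `User:` / `Assistant:` labels are hypothetical; ChatGPT's internal format is not described in this article.

```python
class Conversation:
    """Keeps the full chat history and rebuilds the model input each turn."""

    def __init__(self) -> None:
        self.turns: list[tuple[str, str]] = []  # (speaker, text)

    def build_model_input(self, new_prompt: str) -> str:
        """Record the new prompt and return the entire transcript so far."""
        self.turns.append(("User", new_prompt))
        lines = [f"{speaker}: {text}" for speaker, text in self.turns]
        lines.append("Assistant:")  # cue for the model to continue as the assistant
        return "\n".join(lines)

    def record_reply(self, reply: str) -> None:
        """Store the model's reply so later turns can see it."""
        self.turns.append(("Assistant", reply))


chat = Conversation()
chat.build_model_input("Who wrote Hamlet?")
chat.record_reply("William Shakespeare.")
second_input = chat.build_model_input("When was he born?")
print(second_input)
```

The second input contains the first question and answer, which is the only reason the model can resolve "he" in the follow-up. The model itself keeps no memory between calls.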

Prompt Engineering

Second, ChatGPT applies its own prompt engineering: instructions are injected before and after the user’s prompt to guide the model toward a conversational tone. These instructions are invisible to the user.
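
As a rough illustration, the wrapping step might look like the following. The actual instructions ChatGPT uses are not given in this article, so `HIDDEN_PREFIX`, `HIDDEN_SUFFIX`, and `wrap_prompt` are placeholders invented for the example.

```python
# Placeholder instructions; the real ones are not public in this article.
HIDDEN_PREFIX = "You are a helpful assistant. Answer in a friendly, conversational tone."
HIDDEN_SUFFIX = "Respond to the user's latest message."


def wrap_prompt(user_prompt: str) -> str:
    """Surround the user's text with instructions the user never sees."""
    return "\n\n".join([HIDDEN_PREFIX, user_prompt, HIDDEN_SUFFIX])


model_input = wrap_prompt("Explain tokens in one sentence.")
print(model_input)
```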

Moderation and Safety

Third, the prompt is passed to the moderation API to warn or block certain types of unsafe content. The generated result is also likely to be passed to the moderation API before returning to the user.
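
The flow described above, checking both the incoming prompt and the outgoing reply, can be sketched as a small wrapper. The keyword check below is a crude stand-in written for this example; it is not the moderation API, which is a trained classifier, and `is_flagged`, `answer_safely`, and the blocked phrase are all hypothetical.

```python
from typing import Callable

BLOCKED_TERMS = {"how to pick a lock"}  # placeholder list for the sketch
REFUSAL = "Sorry, I can't help with that."


def is_flagged(text: str) -> bool:
    """Stand-in moderation check: flag text containing a blocked phrase."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def answer_safely(prompt: str, generate: Callable[[str], str]) -> str:
    """Moderate the prompt, call the model, then moderate the reply."""
    if is_flagged(prompt):
        return REFUSAL  # blocked before the model is ever called
    reply = generate(prompt)
    if is_flagged(reply):
        return REFUSAL  # blocked on the way out
    return reply


def echo_model(prompt: str) -> str:
    """Toy model used only to exercise the wrapper."""
    return f"You asked: {prompt}"


print(answer_safely("What is a token?", echo_model))
print(answer_safely("Tell me how to pick a lock", echo_model))
```

Passing the model in as a function keeps the moderation logic independent of whichever model produces the text.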

Conclusion

And that wraps up our journey into the fascinating world of ChatGPT. A lot of engineering went into creating the models used by ChatGPT. The technology behind it is constantly evolving, opening doors to new possibilities and reshaping the way we communicate. Fasten your seat belt and enjoy the ride.
